封面:Singular Value Decomposition (SVD) in Python - AskPython

eigenvalue decomposition for symmetric matrix

令 A∈Rn×n 为一个对称矩阵(i.e.A⊤=A). 那么存在

A=UΛU⊤

其中

U=[u1,u2,⋯,un]∈Rn×n is orthogonalΛ=λ1λ2⋱λn∈Rn×n is a diagonal matrix

且λi,i=1,⋯,n是A的特征值,ui,i=1,⋯,n是对应的特征向量

proof

-

证明所有A的特征值均为实数

-

通过归纳法证明存在A=UΛU⊤

如果n=1

A=1⋅A⋅1

假设对于任意Ak∈Rk×k 存在一个decomposition:Ak=UkΛkUk⊤

对于任意的Ak+1∈R(k+1)×(k+1),令(λ,u)是Ak+1的一个特征值-特征向量满足Ak+1u=λu,∥u∥2=1

因为u∈Rk+1是单位向量,因此可以将其扩展成一个Rk+1的正交基, denoted by Q=[u,P]

Q⊤Ak+1Q=[u⊤P⊤]Ak+1[uP]=[u⊤Ak+1uP⊤Ak+1uu⊤Ak+1PP⊤Ak+1P]

其中,

u⊤Ak+1uP⊤Ak+1uu⊤Ak+1P(P⊤Ak+1P)⊤=u⊤(λu)=λu⊤u=λ=P⊤(λu)=λP⊤u=0=(P⊤Ak+1u)⊤=0⊤=0=P⊤Ak+1⊤P=P⊤Ak+1P⇒P⊤Ak+1P is symmetric

根据归纳法的假设,存在S,D∈Rn×n满足

P⊤Ak+1P=SDS⊤

因此

Q⊤Ak+1Q=[λ00SDS⊤]=[1S][λD][1S⊤]Ak+1=Q[1S][λD][1S⊤]Q⊤=Uk+1Λk+1Uk+1⊤

-

接下来证明Λ是特征值,U是对应的特征向量

A=UΛU⊤⇔AU=UΛ⇒A[u1,⋯,un]=[u1,⋯,un]Λ=[λ1u1,⋯λnun]⇔Aui=λiui

Singular value decomposition

令A∈Rm×n, 其中 m≥n,则A⊤A 是symmetric的

令A⊤A=VΛV⊤是AA⊤的一个特征值分解,其中

V∈Rn×ns.t.V⊤V=VV⊤=IΛ=diag(λ1,⋯,λn)∈Rn×n是一个对角矩阵,其中λ1≥λ2≥⋯≥λn

-

由于A⊤A是SPSD的,因此λ1≥λ2≥⋯≥λn≥0

-

wi为AV∈Rm×n的第i列,则

wi⊤wj=(AVei)⊤(AVej)=ei⊤V⊤A⊤AVej=ei⊤(V⊤V)Λ(V⊤V)ej={λi0i=ji=j

因此AV的列是正交的

-

定义σi=∥wi∥2=wi⊤wi=λi,ui=wi/∥wi∥2

U=[u1u2⋯un]∈Rm×nΣ=diag(σ1,⋯,σn)∈Rn×n

则U⊤U=I(UU⊤=I unless m=n),且UΣ=[w1,⋯,wn]=AV

→A=UΣV⊤

综上,A=UΣV⊤,定义p=min{m,n}其中

U∈Rm×p s.t. U⊤U=IV∈Rp×p s.t. V⊤V=IΣ∈Rn×p 是一个对角矩阵满足 σ1≥σ2≥⋯≥σn≥0

properties of SVD

-

{AVA⊤U=UΣ=VΣ{AviA⊤ui=σiui=σivi

-

{A⊤AviAA⊤ui=σi2vi=σi2ui

-

SVD是矩阵分析中有用的工具

-

rank(A)= 非零特征值数量

-

Ran(A)=span(u1,⋯,ur),其中r=rank(A)

proof

Ran(A)={Ax∣x∈Rn}={UΣVTxx∈Rn}={i=1∑pσiui(viTx)x∈Rn}=⎩⎨⎧i:σi=0∑σi(viTx)⋅ui∣x∈Rn}=span{u1,u2,…,ur}⎭⎬⎫

-

Ker(A)=span{v1,v2,⋯,vr}⊥

-

∥A∥2=σ1, ∥A∥F=∑i=1pσi

proof

∥A∥2=∥x∥2=1max∥Ax∥2=(∥x∥2=1max∥Ax∥22)1/2=(∥x∥2=1maxxTATAx)1/2=(∥x∥2=1maxxTVΣ2V⊤x)1/2=(∥x∥2=1maxy⊤Σ2y)1/2=(σ12)1/2=σ1

由于V是正交矩阵,因此∥V⊤x∥=1

∥A∥F=(⟨A,A⟩)1/2=(⟨UΣV⊤,UΣV⊤⟩)1/2=(tr(VΣU⊤UΣV⊤))1/2=(tr(VΣ2VT))1/2=(tr(Σ2V⊤V))1/2=(tr(Σ2))1/2=(σ12+σ22+⋯+σp2)1/2.

-

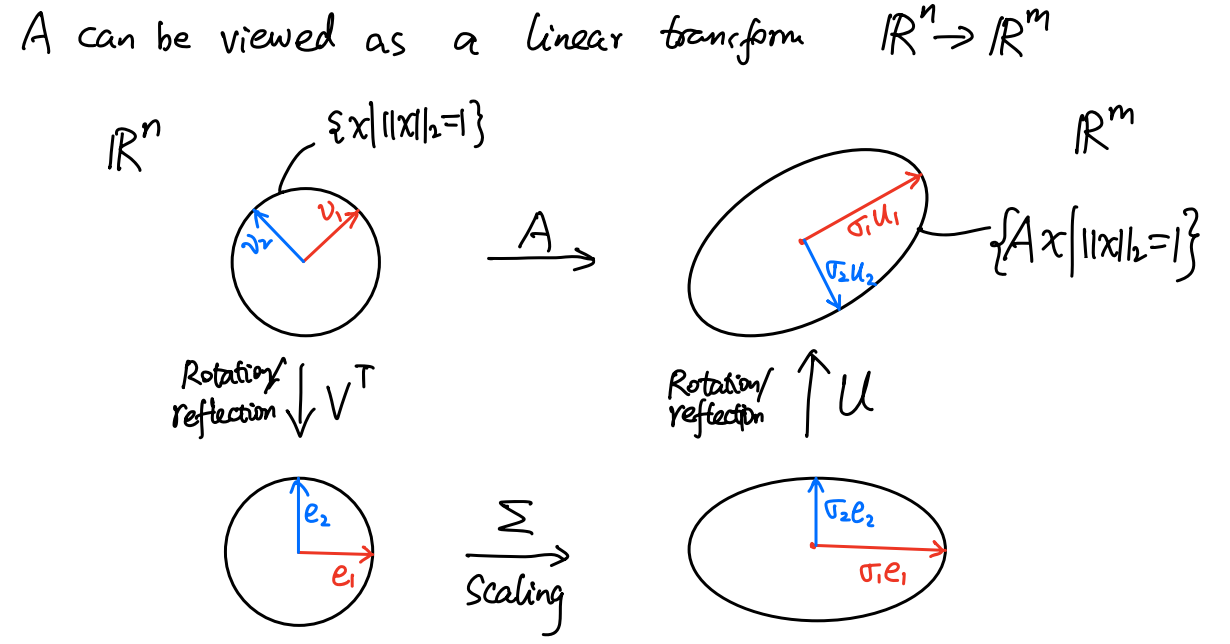

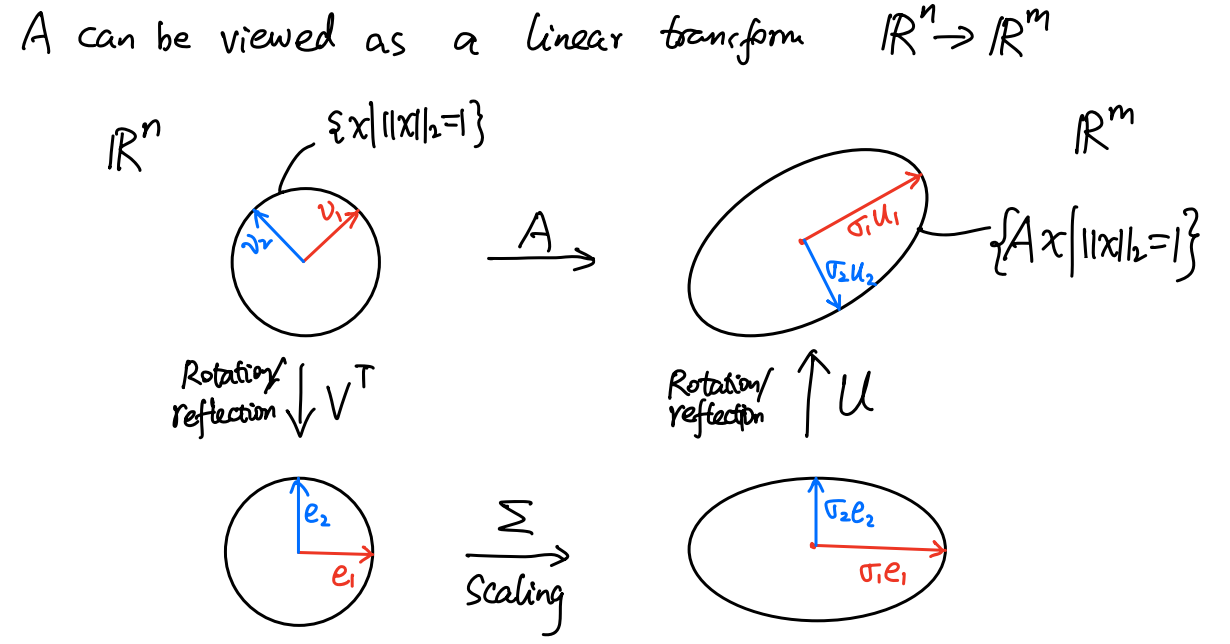

几何意义

-

SVD是关于一个矩阵的最优的低秩近似

A∈Rm×n,A=UΣV⊤,则可以将A表示为:

A=i=1∑pσiuivi⊤

令k是一个正整数满足k≤p,定义

Ak=i=1∑kσiuivi⊤

则

Ak=B:rank(B)≤kargmin∥A−B∥2Ak=B:rank(B)≤kargmin∥A−B∥F

proof: 2-norm case

∥A−Ak∥2=i=1∑pσiuivi⊤−i=1∑kσiuivi⊤2=i=k+1∑pσiuivi⊤2=σk+1

接下来希望证明对于任意B:rank(B)≤k有

∥A−B∥2≥σk+1

不妨设p=n(i.e.n≤m)。由于rank(B)≤k,

rank(B[v1,⋯,vk+1])≤rank(B)≤k

因此

Bdefine w[v1v2⋯vk+1]c1c2⋮ck+1=0

存在一个非0解。不妨设∥w∥2=1(i.e.∑i=1k+1ci2=1)

∥A−B∥22≥(1)∥(A−B)w∥22=∥Aw∥22=i=1∑k+1ciAvi22=(2)i=1∑k+1ciσiui22=(3)i=1∑k+1ci2σi2≥i=1∑k+1ci2σk+12=σk+12

其中(1)使用了∥AB∥2≤∥A∥2∥B∥2

(2)使用了SVD的性质1

(3)成立是因为U的列向量是正交的

i=1∑k+1ciσiui22=i=1∑k+1j=1∑k+1cicjσiσj⟨ui,uj⟩

Application

A≈LR⊤

其中L∈Rm×k,R∈Rk×n。

PCA

L∈Rm×k,R∈Rn×kmin∥A−LR⊤∥F2⇔M:rank(M)≤kmin∥A−M∥F2

The solution is given by k-truncated SVD of A

Column Subset Selection

L: A的一些列,R∈Rn×k

L:k−columns of AR∈Rn×kmin∥A−LR⊤∥F2⇔S⊂{1,⋯,m}∣S∣=kR∈Rn×kmin∥A−AsAs†A∥F2

PRQR gives a good approximate solution

Non Negtive Matrix Factorization

L∈R+m×kR∈R+k×nmin∥A−LR⊤∥F2

to be continue.